BID DATA AND DEEP LEARNING INTRODUCTION

Big data analytics examines large amounts of data to uncover hidden patterns, correlations and other insights. With today’s technology, it’s possible to analyze your data and get answers from it almost immediately – an effort that’s slower and less efficient with more traditional business intelligence solutions.

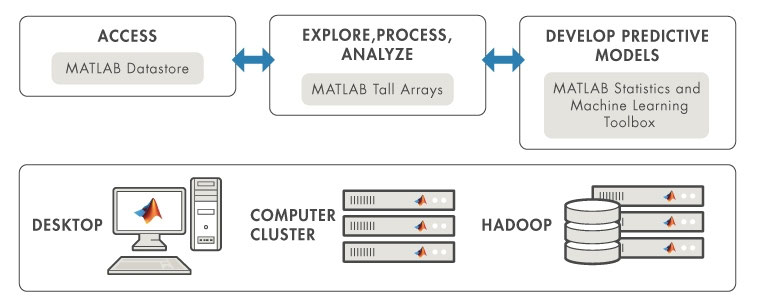

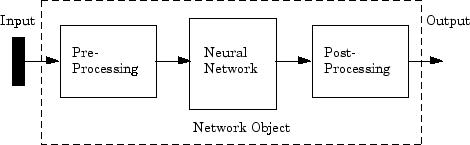

MATLAB provides a single, high-performance environment for working with big data represented in the following scheme:

.

Use MATLAB datastores to access data that normally does not fit into the memory of a single computer. Datastores support a variety of data types and storage systems.

Explore, clean, process, and gain insight from big data using hundreds of data manipulation, mathematical, and statistical functions in MATLAB.

Tall arrays allow you to apply statistics, machine learning , and visualization tools to data that does not fit in memory. Distributed arrays allow you to apply math and matrix operations on data that fits into the aggregate memory of a compute cluster. Both tall arrays and distributed arrays allow you to use the same functions that you’re already familiar with.

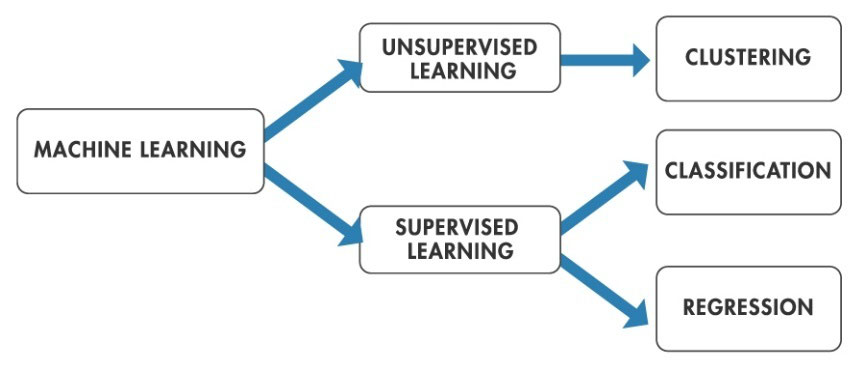

Use advanced mathematics and machine learning algorithms in MATLAB to perform unsupervised and supervised learning with big data.

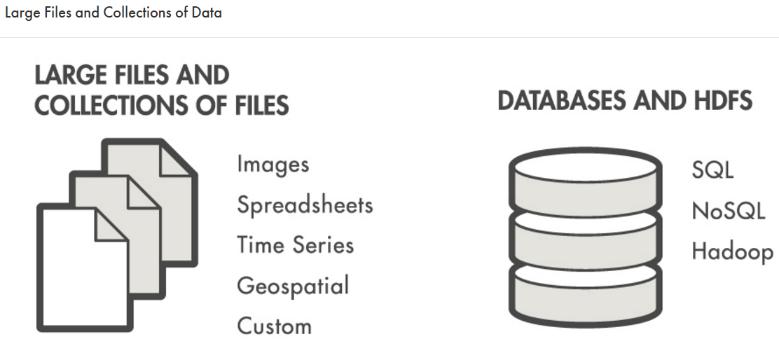

Access and analyze big data with MATLAB using your existing IT systems and processes, including:

Desktop PC with local disk and fileshares

SQL and NoSQL databases

Hadoop, HDFS, and Spark

You can also deploy analytics in interactive, streaming, and batch applications royalty-free.

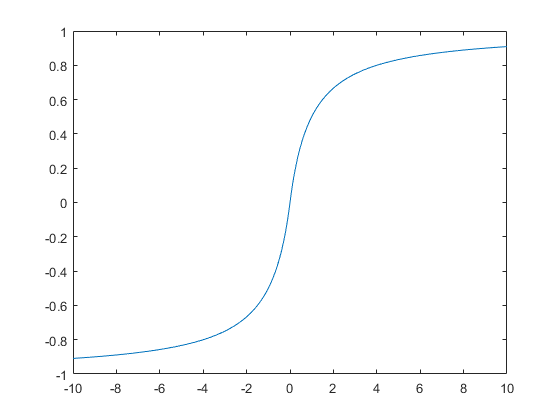

Deep learning (also known as deep structured learning , hierarchical learning or deep machine learning ) is a branch of machine learning based on a set of algorithms that attempt to model high level abstractions in data. In a simple case, there might be two sets of neurons: ones that receive an input signal and ones that send an output signal. When the input layer receives an input it passes on a modified version of the input to the next layer. In a deep network, there are many layers between the input and output (and the layers are not made of neurons but it can help to think of it that way), allowing the algorithm to use multiple processing layers, composed of multiple linear and non- linear transformations .

Deep learning is part of a broader family of machine learning methods based on learning representations of data. An observation (e.g., an image) can be represented in many ways such as a vector of intensity values per pixel, or in a more abstract way as a set of edges, regions of particular shape, etc. Some representations are better than others at simplifying the learning task (e.g., face recognition or facial expression recognition). One of the promises of deep learning is replacing handcrafted features with efficient algorithms for unsupervised or semi-supervised feature learning and hierarchical feature extraction. Research in this area attempts to make better representations and create models to learn these representations from large-scale unlabeled data. Some of the representations are inspired by advances in neuroscience and are loosely based on interpretation of information processing and communication patterns in a nervous system, such as neural coding which attempts to define a relationship between various stimuli and associated neuronal responses in the brain.

Various deep learning architectures such as deep neural networks, convolutional deep neural networks, deep belief networks and recurrent neural networks have been applied to fields like computer vision, automatic speech recognition, natural language processing, audio recognition and bioinformatics where they have been shown to produce state-of-the-art results on various tasks.

Deep learning has been characterized as a buzzword, or a rebranding of neural networks.

Deep learning is characterized as a class of machine learning algorithms that:

use a cascade of many layers of nonlinear processing units for feature extraction and transformation. Each successive layer uses the output from the previous layer as input. The algorithms may be supervised or unsupervised and applications include pattern analysis (unsupervised) and classification (supervised).

are based on the (unsupervised) learning of multiple levels of features or representations of the data. Higher level features are derived from lower level features to form a hierarchical representation.

are part of the broader machine learning field of learning representations of data.

learn multiple levels of representations that correspond to different levels of abstraction; the levels form a hierarchy of concepts.

These definitions have in common multiple layers of nonlinear processing units and the supervised or unsupervised learning of feature representations in each layer, with the layers forming a hierarchy from low-level to high-level features. The composition of a layer of nonlinear processing units used in a deep learning algorithm depends on the problem to be solved. Layers that have been used in deep learning include hidden layers of an artificial neural network and sets of complicated propositional formulas. They may also include latent variables organized layer-wise in deep generative models such as the nodes in Deep Belief Networks and Deep Boltzmann Machines.

Deep learning algorithms transform their inputs through more layers than shallow learning algorithms. At each layer, the signal is transformed by a processing unit, like an artificial neuron, whose parameters are ‘learned’ through training. A chain of transformations from input to output is a credit assignment path (CAP). CAPs describe potentially causal connections between input and output and may vary in length – for a feedforward neural network, the depth of the CAPs (thus of the network) is the number of hidden layers plus one (as the output layer is also parameterized), but for recurrent neural networks, in which a signal may propagate through a layer more than once, the CAP is potentially unlimited in length. There is no universally agreed upon threshold of depth dividing shallow learning from deep learning, but most researchers in the field agree that deep learning has multiple nonlinear layers (CAP > 2) and Juergen Schmidhuber considers CAP > 10 to be very deep learning.

Deep learning algorithms are based on distributed representations. The underlying assumption behind distributed representations is that observed data are generated by the interactions of factors organized in layers. Deep learning adds the assumption that these layers of factors correspond to levels of abstraction or composition. Varying numbers of layers and layer sizes can be used to provide different amounts of abstraction. Deep learning exploits this idea of hierarchical explanatory factors where higher level, more abstract concepts are learned from the lower level ones. These architectures are often constructed with a greedy layer-by-layer method. Deep learning helps to disentangle these abstractions and pick out which features are useful for learning.

For supervised learning tasks, deep learning methods obviate feature engineering, by translating the data into compact intermediate representations akin to principal components, and derive layered structures which remove redundancy in representation. Many deep learning algorithms are applied to unsupervised learning tasks. This is an important benefit because unlabeled data are usually more abundant than labeled data. Examples of deep structures that can be trained in an unsupervised manner are neural history compressors and deep belief networks.

Some of the most successful deep learning methods involve artificial neural networks. Artificial neural networks are inspired by the 1959 biological model proposed by Nobel laureates David H. Hubel & Torsten Wiesel, who found two types of cells in the primary visual cortex: simple cells and complex cells. Many artificial neural networks can be viewed as cascading models of cell types inspired by these biological observations. Fukushima’s Neocognitron introduced convolutional neural networks partially trained by unsupervised learning with human-directed features in the neural plane. Yann LeCun et al. (1989) applied supervised backpropagation to such architectures. Weng et al. (1992) published convolutional neural networks Cresceptron for 3-D object recognition from images of cluttered scenes and segmentation of such objects from images.

An obvious need for recognizing general 3-D objects is least shift invariance and tolerance to deformation. Max-pooling appeared to be first proposed by Cresceptron to enable the network to tolerate small-to-large deformation in a hierarchical way, while using convolution. Max-pooling helps, but does not guarantee, shift-invariance at the pixel level.

With the advent of the back-propagation algorithm based on automatic differentiation, many researchers tried to train supervised deep artificial neural networks from scratch, initially with little success. Sepp Hochreiter’s diploma thesis of 1991 formally identified the reason for this failure as the vanishing gradient problem, which affects many-layered feedforward networks and recurrent neural networks. Recurrent networks are trained by unfolding them into very deep feedforward networks, where a new layer is created for each time step of an input sequence processed by the network. As errors propagate from layer to layer, they shrink exponentially with the number of layers, impeding the tuning of neuron weights which is based on those errors.

To overcome this problem, several methods were proposed. One is Jürgen Schmidhuber’s multi-level hierarchy of networks (1992) pre-trained one level at a time by unsupervised learning, fine-tuned by backpropagation. Here each level learns a compressed representation of the observations that is fed to the next level.

Another method is the long short-term memory (LSTM) network of Hochreiter & Schmidhuber (1997). In 2009, deep multidimensional LSTM networks won three ICDAR 2009 competitions in connected handwriting recognition, without any prior knowledge about the three languages to be learned. Sven Behnke in 2003 relied only on the sign of the gradient (Rprop) when training his Neural Abstraction Pyramid to solve problems like image reconstruction and face localization.

Other methods also use unsupervised pre-training to structure a neural network, making it first learn generally useful feature detectors. Then the network is trained further by supervised back-propagation to classify labeled data. The deep model of Hinton et al. (2006) involves learning the distribution of a high-level representation using successive layers of binary or real-valued latent variables. It uses a restricted Boltzmann machine (Smolensky, 1986 [87] ) to model each new layer of higher level features. Each new layer guarantees an increase on the lower-bound of the log likelihood of the data, thus improving the model, if trained properly. Once sufficiently many layers have been learned, the deep architecture may be used as a generative model by reproducing the data when sampling down the model (an “ancestral pass”) from the top level feature activations. [88] Hinton reports that his models are effective feature extractors over high-dimensional, structured data.

In 2012, the Google Brain team led by Andrew Ng and Jeff Dean created a neural network that learned to recognize higher-level concepts, such as cats, only from watching unlabeled images taken from YouTube videos.

Other methods rely on the sheer processing power of modern computers, in particular, GPUs. In 2010, Dan Ciresan and colleagues in Jürgen Schmidhuber’s group at the Swiss AI Lab IDSIA showed that despite the above-mentioned “vanishing gradient problem,” the superior processing power of GPUs makes plain back-propagation feasible for deep feedforward neural networks with many layers. The method outperformed all other machine learning techniques on the old, famous MNIST handwritten digits problem of Yann LeCun and colleagues at NYU.

At about the same time, in late 2009, deep learning feedforward networks made inroads into speech recognition, as marked by the NIPS Workshop on Deep Learning for Speech Recognition. Intensive collaborative work between Microsoft Research and University of Toronto researchers demonstrated by mid-2010 in Redmond that deep neural networks interfaced with a hidden Markov model with context-dependent states that define the neural network output layer can drastically reduce errors in large-vocabulary speech recognition tasks such as voice search. The same deep neural net model was shown to scale up to Switchboard tasks about one year later at Microsoft Research Asia. Even earlier, in 2007, LSTM trained by CTC started to get excellent results in certain applications. This method is now widely used, for example, in Google’s greatly improved speech recognition for all smartphone users.

As of 2011, the state of the art in deep learning feedforward networks alternates convolutional layers and max-pooling layers, topped by several fully connected or sparsely connected layer followed by a final classification layer. Training is usually done without any unsupervised pre-training. Since 2011, GPU-based implementations of this approach won many pattern recognition contests, including the IJCNN 2011 Traffic Sign Recognition Competition, the ISBI 2012 Segmentation of neuronal structures in EM stacks challenge, the ImageNet Competition, and others.

Such supervised deep learning methods also were the first artificial pattern recognizers to achieve human-competitive performance on certain tasks.

To overcome the barriers of weak AI represented by deep learning, it is necessary to go beyond deep learning architectures, because biological brains use both shallow and deep circuits as reported by brain anatomy displaying a wide variety of invariance. Weng argued that the brain self-wires largely according to signal statistics and, therefore, a serial cascade cannot catch all major statistical dependencies. ANNs were able to guarantee shift invariance to deal with small and large natural objects in large cluttered scenes, only when invariance extended beyond shift, to all ANN-learned concepts, such as location, type (object class label), scale, lighting. This was realized in Developmental Networks (DNs) whose embodiments are Where-What Networks, WWN-1 (2008) through WWN-7.

A deep neural network (DNN) is an artificial neural network (ANN) with multiple hidden layers of units between the input and output layers. Similar to shallow ANNs, DNNs can model complex non-linear relationships. DNN architectures, e.g., for object detection and parsing, generate compositional models where the object is expressed as a layered composition of image primitives. The extra layers enable composition of features from lower layers, giving the potential of modeling complex data with fewer units than a similarly performing shallow network.

DNNs are typically designed as feedforward networks, but research has very successfully applied recurrent neural networks, especially LSTM, for applications such as language modeling . Convolutional deep neural networks (CNNs) are used in computer vision where their success is well-documented. CNNs also have been applied to acoustic modeling for automatic speech recognition (ASR), where they have shown success over previous models. For simplicity, a look at training DNNs is given here.

A DNN can be discriminatively trained with the standard backpropagation algorithm .

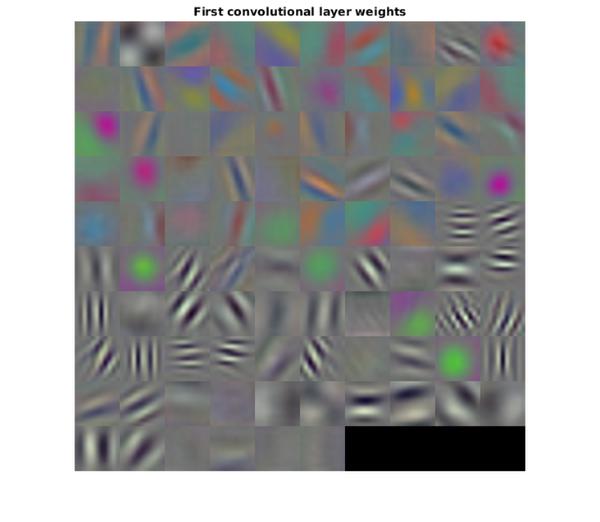

CNNs have become the method of choice for processing visual and other two-dimensional data. A CNN is composed of one or more convolutional layers with fully connected layers (matching those in typical artificial neural networks) on top. It also uses tied weights and pooling layers. In particular, max-pooling is often used in Fukushima’s convolutional architecture. This architecture allows CNNs to take advantage of the 2D structure of input data. In comparison with other deep architectures, convolutional neural networks have shown superior results in both image and speech applications. They can also be trained with standard backpropagation. CNNs are easier to train than other regular, deep, feed-forward neural networks and have many fewer parameters to estimate, making them a highly attractive architecture to use. Examples of applications in Computer Vision include DeepDream. See the main article on Convolutional neural networks for numerous additional references.

A recursive neural network is created by applying the same set of weights recursively over a differentiable graph-like structure, by traversing the structure in topological order. Such networks are typically also trained by the reverse mode of automatic differentiation. They were introduced to learn distributed representations of structure, such as logical terms. A special case of recursive neural networks is the RNN itself whose structure corresponds to a linear chain. Recursive neural networks have been applied to natural language processing. The Recursive Neural Tensor Network uses a tensor-based composition function for all nodes in the tree.

Numerous researchers now use variants of a deep learning RNN called the Long short-term memory (LSTM) network published by Hochreiter & Schmidhuber in 1997. It is a system that unlike traditional RNNs doesn’t have the vanishing gradient problem. LSTM is normally augmented by recurrent gates called forget gates. LSTM RNNs prevent backpropagated errors from vanishing or exploding. Instead errors can flow backwards through unlimited numbers of virtual layers in LSTM RNNs unfolded in space. That is, LSTM can learn “Very Deep Learning” tasks that require memories of events that happened thousands or even millions of discrete time steps ago. Problem-specific LSTM-like topologies can be evolved. LSTM works even when there are long delays, and it can handle signals that have a mix of low and high frequency components.

Today, many applications use stacks of LSTM RNNs and train them by Connectionist Temporal Classification (CTC) to find an RNN weight matrix that maximizes the probability of the label sequences in a training set, given the corresponding input sequences. CTC achieves both alignment and recognition. In 2009, CTC-trained LSTM was the first RNN to win pattern recognition contests, when it won several competitions in connected handwriting recognition. Already in 2003, LSTM started to become competitive with traditional speech recognizers on certain tasks. In 2007, the combination with CTC achieved first good results on speech data. Since then, this approach has revolutionised speech recognition. In 2014, the Chinese search giant Baidu used CTC-trained RNNs to break the Switchboard Hub5’00 speech recognition benchmark, without using any traditional speech processing methods, LSTM also improved large-vocabulary speech recognition, text-to-speech synthesis, also for Google Android, and photo-real talking heads. In 2015, Google’s speech recognition reportedly experienced a dramatic performance jump of 49% through CTC-trained LSTM, which is now available through Google Voice to billions of smartphone users.

LSTM has also become very popular in the field of Natural Language Processing. Unlike previous models based on HMMs and similar concepts, LSTM can learn to recognise context-sensitive languages. LSTM improved machine translation, Language modeling and Multilingual Language Processing. LSTM combined with Convolutional Neural Networks (CNNs) also improved automatic image captioning and a plethora of other applications.

A deep belief network (DBN) is a probabilistic, generative model made up of multiple layers of hidden units. It can be considered a composition of simple learning modules that make up each layer. A DBN can be used to generatively pre-train a DNN by using the learned DBN weights as the initial DNN weights. Back-propagation or other discriminative algorithms can then be applied for fine-tuning of these weights. This is particularly helpful when limited training data are available, because poorly initialized weights can significantly hinder the learned model’s performance. These pre-trained weights are in a region of the weight space that is closer to the optimal weights than are randomly chosen initial weights. This allows for both improved modeling and faster convergence of the fine-tuning phase.

A DBN can be efficiently trained in an unsupervised, layer-by-layer manner, where the layers are typically made of restricted Boltzmann machines (RBM). An RBM is an undirected, generative energy-based model with a “visible” input layer and a hidden layer, and connections between the layers but not within layers. The training method for RBMs proposed by Geoffrey Hinton for use with training “Product of Expert” models is called contrastive divergence (CD). CD provides an approximation to the maximum likelihood method that would ideally be applied for learning the weights of the RBM

A recent achievement in deep learning is the use of convolutional deep belief networks (CDBN). CDBNs have structure very similar to a convolutional neural networks and are trained similar to deep belief networks. Therefore, they exploit the 2D structure of images, like CNNs do, and make use of pre-training like deep belief networks. They provide a generic structure which can be used in many image and signal processing tasks. Recently, many benchmark results on standard image datasets like CIFAR have been obtained using CDBNs.

Large memory storage and retrieval neural networks (LAMSTAR) are fast deep learning neural networks of many layers which can use many filters simultaneously. These filters may be nonlinear, stochastic, logic, non-stationary, or even non-analytical. They are biologically motivated and continuously learning.

A LAMSTAR neural network may serve as a dynamic neural network in spatial or time domain or both. Its speed is provided by Hebbian link-weights (Chapter 9 of in D. Graupe, 2013, which serve to integrate the various and usually different filters (preprocessing functions) into its many layers and to dynamically rank the significance of the various layers and functions relative to a given task for deep learning. This grossly imitates biological learning which integrates outputs various preprocessors (cochlea, retina, etc.) and cortexes (auditory, visual, etc.) and their various regions. Its deep learning capability is further enhanced by using inhibition, correlation and by its ability to cope with incomplete data, or “lost” neurons or layers even at the midst of a task. Furthermore, it is fully transparent due to its link weights. The link-weights also allow dynamic determination of innovation and redundancy, and facilitate the ranking of layers, of filters or of individual neurons relative to a task.

LAMSTAR has been applied to many medical and financial predictions (see Graupe, 2013 ] Section 9C), adaptive filtering of noisy speech in unknown noise, [152] still-image recognition (Graupe, 2013 Section 9D), video image recognition, software security, [156] adaptive control of non-linear systems, and others. LAMSTAR had a much faster computing speed and somewhat lower error than a convolutional neural network based on ReLU-function filters and max pooling, in a comparative character recognition study.

These applications demonstrate delving into aspects of the data that are hidden from shallow learning networks or even from the human senses (eye, ear), such as in the cases of predicting onset of sleep apnea events, [149] of an electrocardiogram of a fetus as recorded from skin-surface electrodes placed on the mother’s abdomen early in pregnancy, [150] of financial prediction (Section 9C in Graupe, 2013), or in blind filtering of noisy speech.

LAMSTAR was proposed in 1996 (A U.S. Patent 5,920,852 A) and was further developed by D Graupe and H Kordylewski 1997-2002. A modified version, known as LAMSTAR 2, was developed by N C Schneider and D Graupe in 2008.

A deep Boltzmann machine (DBM) is a type of binary pairwise Markov random field (undirected probabilistic graphical model) with multiple layers of hidden random variables. It is a network of symmetrically coupled stochastic binary units.

Like DBNs, DBMs can learn complex and abstract internal representations of the input in tasks such as object or speech recognition, using limited labeled data to fine-tune the representations built using a large supply of unlabeled sensory input data. However, unlike DBNs and deep convolutional neural networks, they adopt the inference and training procedure in both directions, bottom-up and top-down pass, which allow the DBMs to better unveil the representations of the ambiguous and complex input structures.

However, the speed of DBMs limits their performance and functionality. Because exact maximum likelihood learning is intractable for DBMs, we may perform approximate maximum likelihood learning. Another option is to use mean-field inference to estimate data-dependent expectations, and approximation the expected sufficient statistics of the model by using Markov chain Monte Carlo (MCMC). This approximate inference, which must be done for each test input, is about 25 to 50 times slower than a single bottom-up pass in DBMs. This makes the joint optimization impractical for large data sets, and seriously restricts the use of DBMs for tasks such as feature representation.

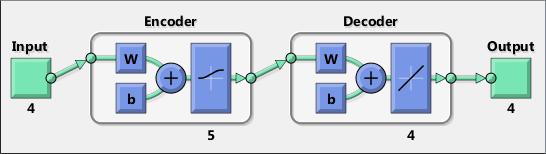

An encoder–decoder framework is a framework based on neural networks that aims to map highly structured input to highly structured output. It was proposed recently in the context of machine translation where the input and output are written sentences in two natural languages. In that work, an LSTM recurrent neural network (RNN) or convolutional neural network (CNN) was used as an encoder to summarize a source sentence, and the summary was decoded using a conditional recurrent neural network language model to produce the translation. All these systems have the same building blocks: gated RNNs and CNNs, and trained attention mechanisms.

Deep ñearning is used in various facets of science. The most common applications are the following:

Automatic speech recognition

Image recognition

Natural language processing

Drug discovery and toxicology

Customer relationship management

Recommendation systems

Biomedical informatics

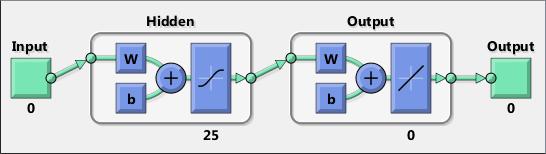

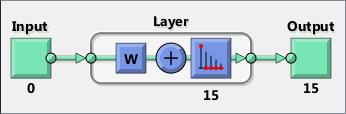

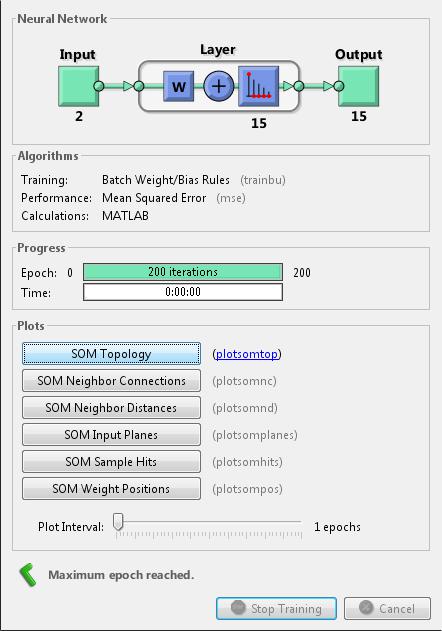

MATLAB has the tool Neural Network Toolbox (Deep Learning Toolbox from version 18) that provides algorithms, functions, and apps to create, train, visualize, and simulate neural networks. You can perform classification, regression, clustering, dimensionality reduction, time-series forecasting, and dynamic system modeling and control.

The toolbox includes convolutional neural network and autoencoder deep learning algorithms for image classification and feature learning tasks. To speed up training of large data sets, you can distribute computations and data across multicore processors, GPUs, and computer clusters using Parallel Computing Toolbox.

The more important features are the following:

Deep learning, including convolutional neural networks and autoencoders

Parallel computing and GPU support for accelerating training (with Parallel Computing Toolbox)

Supervised learning algorithms, including multilayer, radial basis, learning vector quantization (LVQ), time-delay, nonlinear autoregressive (NARX), and recurrent neural network (RNN)

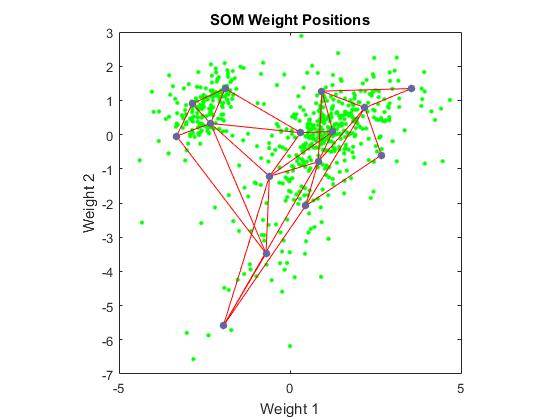

Unsupervised learning algorithms, including self-organizing maps and competitive layers

Apps for data-fitting, pattern recognition, and clustering

Preprocessing, postprocessing, and network visualization for improving training efficiency and assessing network performance

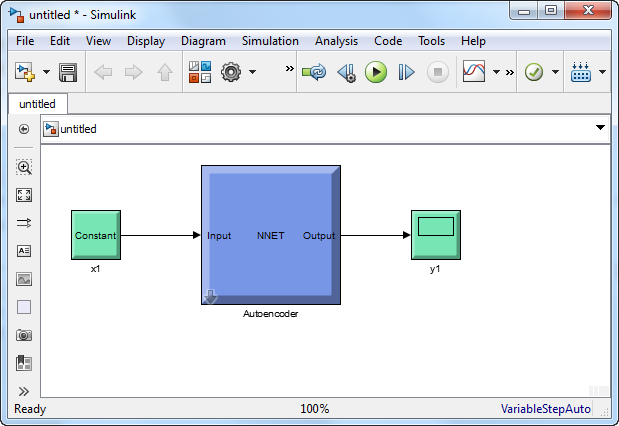

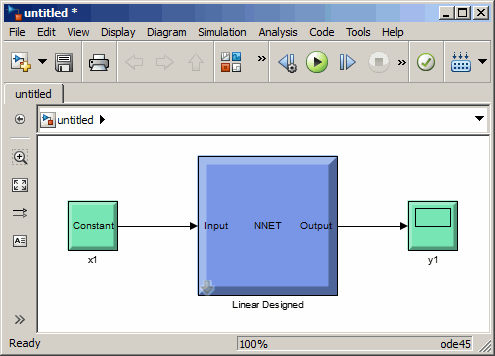

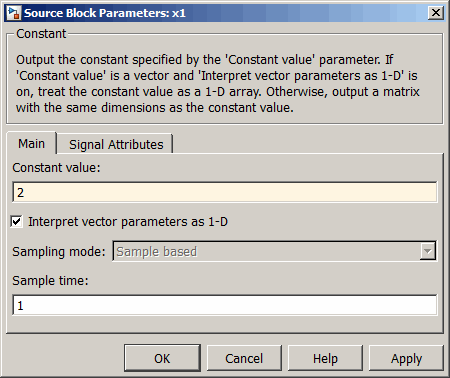

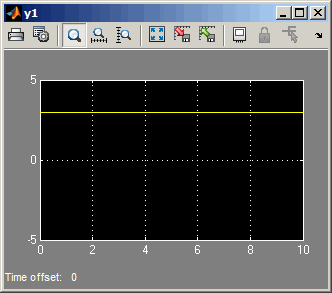

Simulink® blocks for building and evaluating neural networks and for control systems applications

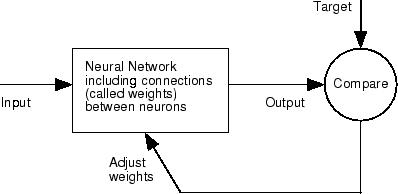

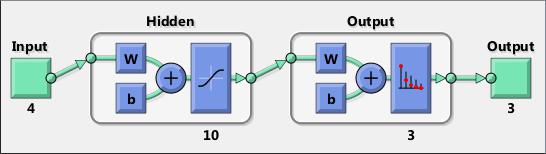

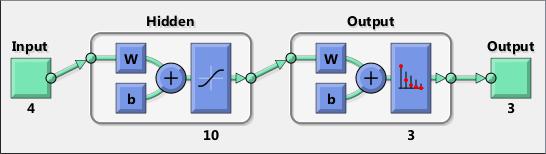

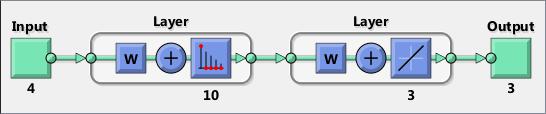

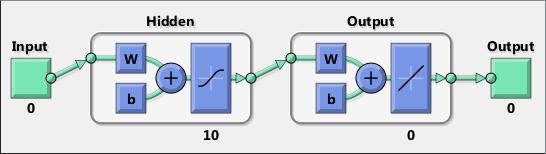

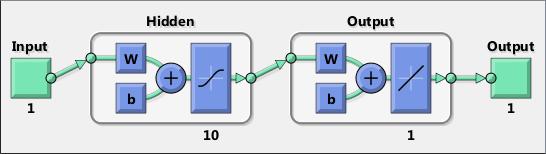

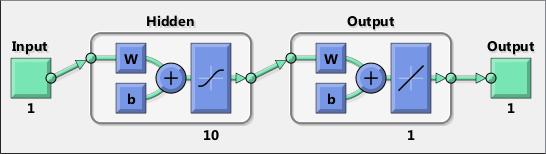

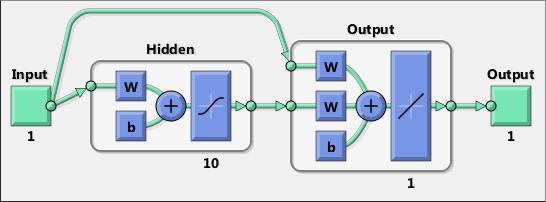

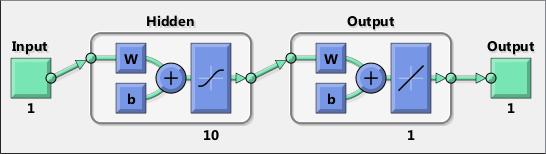

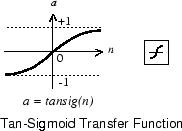

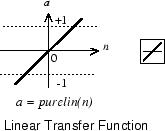

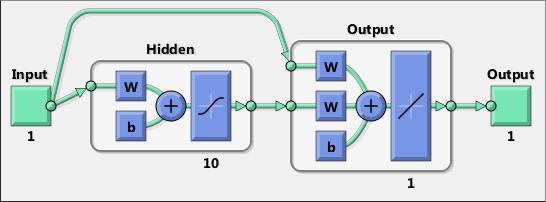

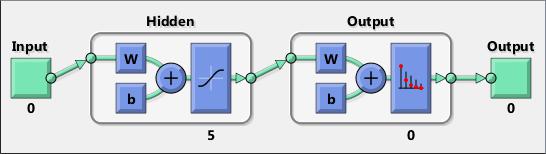

Neural networks are composed of simple elements operating in parallel. These elements are inspired by biological nervous systems. As in nature, the connections between elements largely determine the network function. You can train a neural network to perform a particular function by adjusting the values of the connections (weights) between elements.

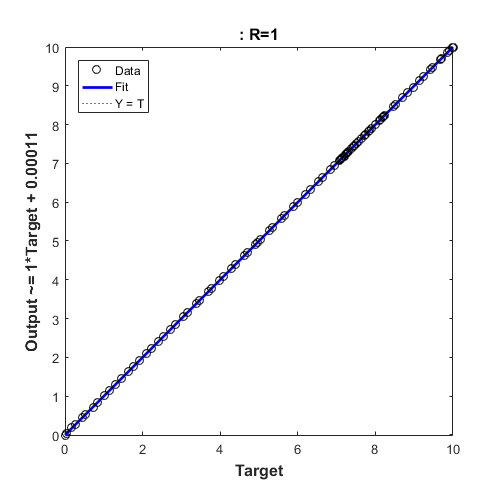

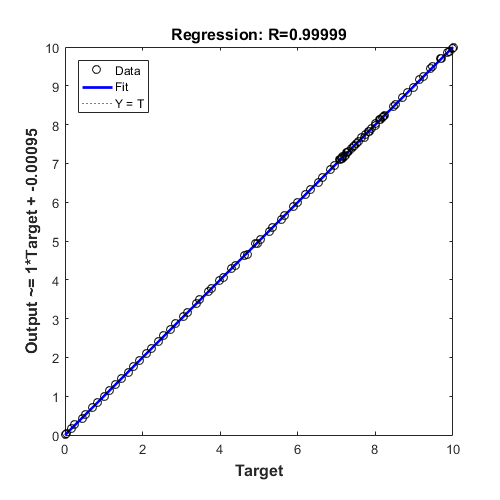

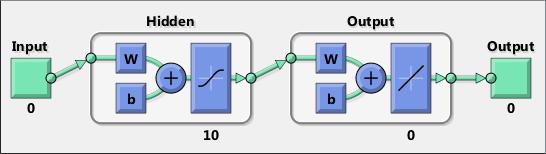

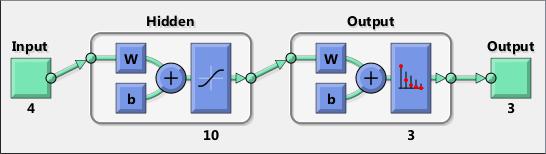

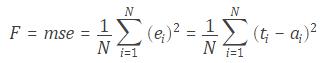

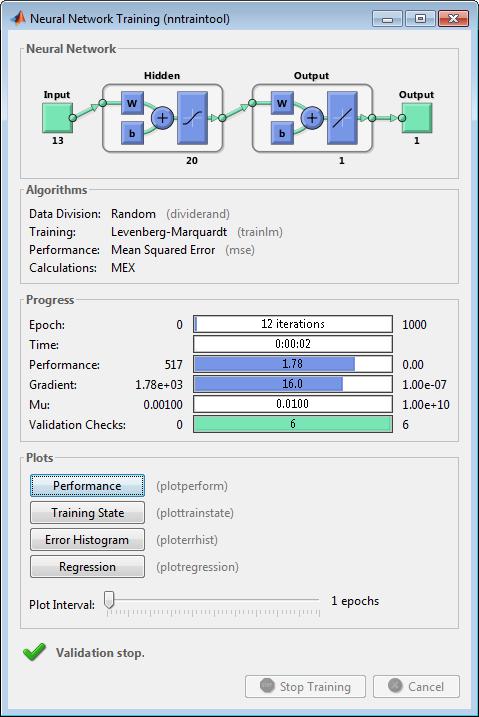

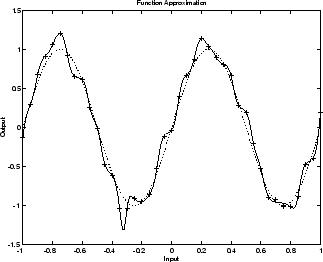

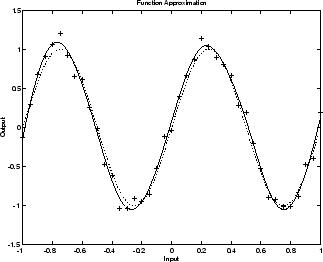

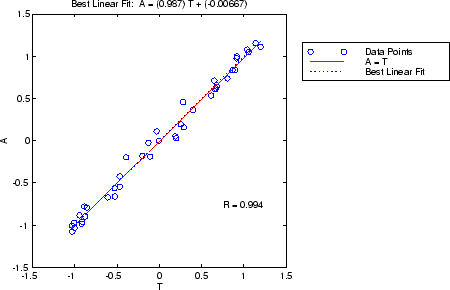

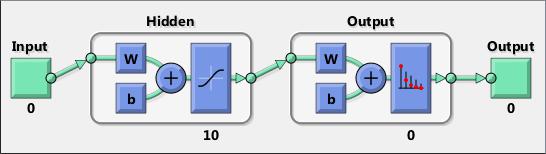

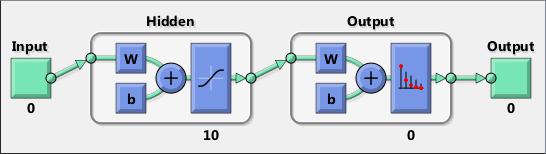

Typically, neural networks are adjusted, or trained, so that a particular input leads to a specific target output. The next figure illustrates such a situation. Here, the network is adjusted, based on a comparison of the output and the target, until the network output matches the target. Typically, many such input/target pairs are needed to train a network.

Neural networks have been trained to perform complex functions in various fields, including pattern recognition, identification, classification, speech, vision, and control systems.

Neural networks can also be trained to solve problems that are difficult for conventional computers or human beings. The toolbox emphasizes the use of neural network paradigms that build up to—or are themselves used in— engineering, financial, and other practical applications.

There are four ways you can use the Deep Learning Toolbox software.

The first way is through its tools. You can open any of these tools from a master tool started by the command nnstart . These tools provide a convenient way to access the capabilities of the toolbox for the following tasks:

Function fitting ( nftool )

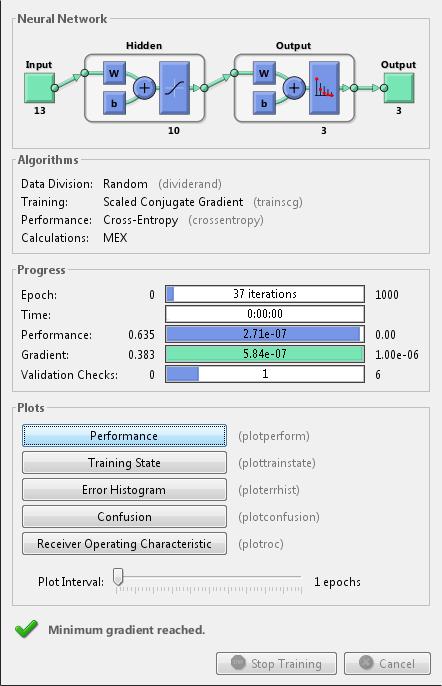

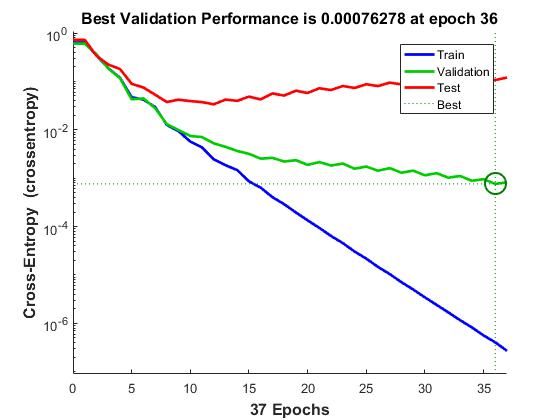

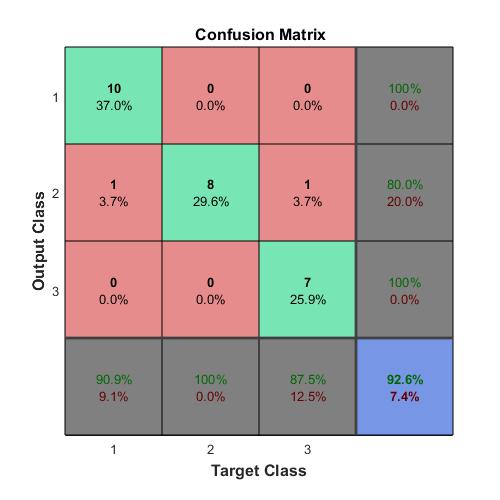

Pattern recognition ( nprtool )

Data clustering ( nctool )

Time-series analysis ( ntstool )

The second way to use the toolbox is through basic command-line operations. The command-line operations offer more flexibility than the tools, but with some added complexity. If this is your first experience with the toolbox, the tools provide the best introduction. In addition, the tools can generate scripts of documented MATLAB code to provide you with templates for creating your own customized command-line functions. The process of using the tools first, and then generating and modifying MATLAB scripts, is an excellent way to learn about the functionality of the toolbox.

The third way to use the toolbox is through customization. This advanced capability allows you to create your own custom neural networks, while still having access to the full functionality of the toolbox. You can create networks with arbitrary connections, and you still be able to train them using existing toolbox training functions (as long as the network components are differentiable).

The fourth way to use the toolbox is through the ability to modify any of the functions contained in the toolbox. Every computational component is written in MATLAB code and is fully accessible.

These four levels of toolbox usage span the novice to the expert: simple tools guide the new user through specific applications, and network customization allows researchers to try novel architectures with minimal effort. Whatever your level of neural network and MATLAB knowledge, there are toolbox features to suit your needs.

The tools themselves form an important part of the learning process for the Neural Network Toolbox software. They guide you through the process of designing neural networks to solve problems in four important application areas, without requiring any background in neural networks or sophistication in using MATLAB. In addition, the tools can automatically generate both simple and advanced MATLAB scripts that can reproduce the steps performed by the tool, but with the option to override default settings. These scripts can provide you with templates for creating customized code, and they can aid you in becoming familiar with the command-line functionality of the toolbox. It is highly recommended that you use the automatic script generation facility of these tools.

It would be impossible to cover the total range of applications for which neural networks have provided outstanding solutions. The remaining sections of this topic describe only a few of the applications in function fitting, pattern recognition, clustering, and time-series analysis. The following table provides an idea of the diversity of applications for which neural networks provide state-of-the-art solutions.

The standard steps for designing neural networks to solve problems are the following:

Collect data

Create the network

Configure the network

Initialize the weights and biases

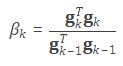

Train the network

Validate the network

Use the network

There are four typical neural networks application areas: function fitting, pattern recognition, clustering, and time-series analysis.

DEEP LEARNING WITH MATLAB: CONVOLUTIONAL Neural NetworkS. FUNCTIONS

Convolution neural networks (CNNs or ConvNets) are essential tools for deep learning, and are especially suited for image recognition. You can construct a CNN architecture, train a network, and use the trained network to predict class labels. You can also extract features from a pre-trained network, and use these features to train a linear classifier. Neural Network Toolbox also enables you to perform transfer learning; that is, retrain the last fully connected layer of an existing CNN on new data.

MATLAB has the following functions:

Syntax

inputlayer = imageInputLayer(inputSize)

inputlayer = imageInputLayer(inputSize,Name,Value)

Description

inputlayer = imageInputLayer( inputSize ) returns an image input layer.

inputlayer = imageInputLayer( inputSize , Name,Value ) returns an image input layer, with additional options specified by one or more Name,Value pair arguments. For example, you can specify a name for the layer.

Examples: Create Image Input Layer

Create an image input layer for 28-by-28 color images. Specify that the software flips the images from left to right at training time with a probability of 0.5.

inputlayer = imageInputLayer([28 28 3],‘DataAugmentation’,‘randfliplr’)

inputlayer =

ImageInputLayer with properties:

Name: ’’

InputSize: [28 28 3]

DataAugmentation: ‘randfliplr’

Normalization: ‘zerocenter’

Input Arguments

inputSize — Size of input data

row vector of two or three integer numbers

Size of the input data, specified as a row vector of two integer numbers corresponding to [height,width] or three integer numbers corresponding to [height,width,channels] .

If the inputSize is a vector of two numbers, then the software sets the channel size to 1.

Example: [200,200,3]

Data Types: single | double

Name-Value Pair Arguments

Specify optional comma-separated pairs of Name,Value arguments. Name is the argument name and Value is the corresponding value. Name must appear inside single quotes ( ’ ’ ). You can specify several name and value pair arguments in any order as Name1,Value1,…,NameN,ValueN .

Example: ‘DataAugmentation’,‘randcrop’,‘Normalization’,‘none’,‘Name’,‘input’ specifies that the software takes a random crop of the image at training time, does not normalize the data, and assigns the name of the layer as input .

‘DataAugmentation’ — Data augmentation transforms

‘none’ (default) | ‘randcrop’ | ‘randfliplr’ | cell array of ‘randcrop’ and ‘randfliplr’

Data augmentation transforms to use during training, specified as the comma-separated pair consisting of ‘DataAugmentation’ and one of the following.

‘none’ — No data augmentation

‘randcrop’ — Take a random crop from the training image. The random crop has the same size as the inputSize .

‘randfliplr’ — Randomly flip the input images from left to right with a 50% chance in the vertical axis.

Cell array of ‘randcrop’ and ‘randfliplr’ . The software applies the augmentation in the order specified in the cell array.

Augmentation of image data is another way of reducing overfitting

Example: ‘DataAugmentation’,{‘randfliplr’,‘randcrop’}

Data Types: char | cell

‘Normalization’ — Data transformation

‘zerocenter’ (default) | ‘none’

Data transformation to apply every time data is forward-propagated through the input layer, specified as the comma-separated pair consisting of ‘Normalization’ and one of the following.

‘zerocenter’ — The software subtracts its mean from the training set.

‘none’ — No transformation.

Example: ‘Normalization’,‘none’

Data Types: char

‘Name’ — Name for the layer

’’ (default) | character vector

Name for the layer, specified as the comma-separated pair consisting of Name and a character vector.

Example: ‘Name’,‘inputlayer’

Data Types: char

Output Arguments

inputlayer — Input layer for the image data

ImageInputLayer object

Input layer for the image data, returned as an ImageInputLayer object.

For information on concatenating layers to construct convolutional neural network architecture, see Layer .

Syntax

convlayer = convolution2dLayer(filterSize,numFilters)

convlayer = convolution2dLayer(filterSize,numFilters,Name,Value)

Description

convlayer = convolution2dLayer( filterSize , numFilters ) returns a layer for 2-D convolution .

convlayer = convolution2dLayer( filterSize , numFilters , Name,Value ) returns the convolutional layer, with additional options specified by one or more Name,Value pair arguments.

Examples:

Create convolutional layer

Create a convolutional layer with 96 filters, each with a height and width of 11. Use a stride (step size) of 4 in the horizontal and vertical directions.

convlayer = convolution2dLayer(11,96,‘Stride’,4);

Specify Initial Weight and Biases in Convolutional Layer

Create a convolutional layer with 32 filters, each with a height and width of 5. Pad the input image with 2 pixels along its border. Set the learning rate factor for the bias to 2. Manually initialize the weights from a Gaussian distribution with a standard deviation of 0.0001.

layer = convolution2dLayer(5,32,‘Padding’,2,‘BiasLearnRateFactor’,2);

Suppose the input has color images. Manually initialize the weights from a Gaussian distribution with standard deviation of 0.0001.

layer.Weights = randn([5 5 3 32])*0.0001;

The size of the local regions in the layer is 5-by-5. The number of color channels for each region is 3. The number of feature maps is 32 (the number of filters). Therefore, there are 553*32 weights in the layer.

randn([5 5 3 32]) returns a 5-by-5-by-3-by-32 array of values from a Gaussian distribution with a mean of 0 and a standard deviation of 1. Multiplying the values by 0.0001 sets the standard deviation of the Gaussian distribution equal to 0.0001.

Similarly, initialize the biases from a Gaussian distribution with a mean of 1 and a standard deviation of 0.00001.

layer.Bias = randn([1 1 32])*0.00001+1;

There are 32 feature maps, and therefore 32 biases. randn([1 1 32]) returns a 1-by-1-by-32 array of values from a Gaussian distribution with a mean of 0 and a standard deviation of 1. Multiplying the values by 0.00001 sets the standard deviation of values equal to 0.00001, and adding 1 sets the mean of the Gaussian distribution equal to 1.

Convolution That Fully Covers the Input Image

Suppose the size of the input image is 28-by-28-1. Create a convolutional layer with 16 filters that have a height of 6 and a width of 4, that traverses the image with a stride of 4 both horizontally and vertically. Make sure the convolution covers the images completely.

For the convolution to fully cover the input image, both the horizontal and vertical output dimensions must be integer numbers. For the horizontal output dimension to be an integer, one row zero padding is required on the top and bottom of the image: (28 – 6+ 21)/4 + 1 = 7. For the vertical output dimension to be an integer, no zero padding is required: (28 – 4+ 20)/4 + 1 = 7. Construct the convolutional layer as follows:

convlayer = convolution2dLayer([6 4],16,‘Stride’,4,‘Padding’,[1 0]);

Input Arguments

filterSize — height and width of filters integer value | vector of two integer v alues

Height and width of the filters, specified as an integer value or a vector of two integer values. filterSize defines the size of the local regions to which the neurons connect in the input.

If filterSize is a scalar value, then the filters have the same height and width.

If filterSize is a vector, then it must be of the form [ h w ], where h is the height and w is the width.

Example: [5,5]

Data Types: single | doublé

numFilters — Number of filters integer value

Number of filters, specified as an integer value. numFilters represents the number of neurons in the convolutional layer that connect to the same region in the input. This parameter determines the number of channels (feature maps) in the output of the convolutional layer.

Data Types: single | doublé

Output Arguments

convlayer — 2-D convolutional layer Convolution2DLayer object

2-D convolutional layer for convolutional neural networks, returned as a Convolution2DLayer object.

Convolutional Layer

A convolutional layer consists of neurons that connect to small regions of the input or the layer before it. These regions are called filters . You can specify the size of these regions using the filterSize input argument.

For each region, the software computes a dot product of the weights and the input, and then adds a bias term. The filter then moves along the input vertically and horizontally, repeating the same computation for each region, i.e., convolving the input. The step size with which it moves is called a stride . You can specify this step size with the Stride name-value pair argument. These local regions that the neurons connect to might overlap depending on the filterSize and Stride .

The number of weights used for a filter is h * w * c , where h is the height, and w is the width of the filter size, and c is the number of channels in the input (for example, if the input is a color image, the number of channels is three). As a filter moves along the input, it uses the same set of weights and bias for the convolution, forming a feature map. The convolution layer usually has multiple feature maps, each with a different set of weights and a bias. The number of feature maps is determined by the number of filters.

The total number of parameters in a convolutional layer is (( h * w * c + 1)* Number of Filters ), where 1 is for the bias.

The output height and width of the convolutional layer is ( Input Size – Filter Size + 2* Padding )/ Stride + 1. This value must be an integer for the whole image to be fully covered. If the combination of these parameters does not lead the image to be fully covered, the software by default ignores the remaining part of the image along the right and bottom edge in the convolution.

The total number of neurons in a feature map, say Map Size , is the product of the output height and width. The total number of neurons (output size) in a convolutional layer, then, is Map Size * Number of Filters .

For example, suppose that the input image is a 28-by-28-by-3 color image. For a convolutional layer with 16 filters, and a filter size of 8-by-8, the number of weights per filter is 883 = 192, and the total number of parameters in the layer is (192+1) * 16 = 3088. Assuming stride is 4 in each direction, the total number of neurons in each feature map is 6-by-6 ((28 – 8+0)/4 + 1 = 6). Then, the total number of neurons in the layer is 6616 = 256. Usually, the results from these neurons pass through some form of nonlinearity, such as rectified linear units (ReLU).

Syntax

layer = reluLayer()

layer = reluLayer(Name,Value)

Description

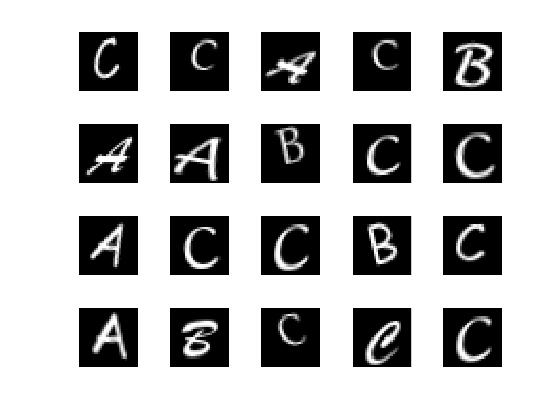

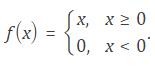

layer = reluLayer() returns a rectified linear unit (ReLU) layer. It performs a threshold operation to each element, where any input value less than zero is set to zero, i.e.,

The ReLU layer does not change the size of its input.

layer = reluLayer( Name,Value ) returns a ReLU layer, with the additional option specified by the Name,Value pair argument.

Examples

Create a rectified linear unit layer with the name relu1 .

layer = reluLayer(‘Name’,‘relu1’);

Syntax

localnormlayer = crossChannelNormalizationLayer(windowChannelSize)

localnormlayer = crossChannelNormalizationLayer(windowChannelSize,Name,Value)

Description

localnormlayer = crossChannelNormalizationLayer( windowChannelSize ) returns a local response normalization layer, which carries out channel-wise normalization [1] .

localnormlayer = crossChannelNormalizationLayer( windowChannelSize , Name,Value ) returns a local response normalization layer, with additional options specified by one or more Name,Value pair arguments.

Examples

Create a local response normalization layer for channel-wise normalization, where a window of five channels will be used to normalize each element, and the additive constant for the normalizer ( K ) is 1.

localnormlayer = crossChannelNormalizationLayer(5,‘K’,1);

Syntax

avgpoollayer = averagePooling2dLayer(poolSize)

avgpoollayer = averagePooling2dLayer(poolSize,Name,Value)

Description

avgpoollayer = averagePooling2dLayer( poolSize ) returns a layer that performs average pooling , dividing the input into rectangular regions and computing the average of each region. poolSize specifies the dimensions of the rectangular region.

avgpoollayer = averagePooling2dLayer( poolSize , Name,Value ) returns the average pooling layer, with additional options specified by one or more Name,Value pair arguments.

Examples:

Average Pooling Layer with Non-Overlapping Pooling Regions

Create an average pooling layer with non-overlapping pooling regions, which down-samples by a factor of 2.

avgpoollayer = averagePooling2dLayer(2,‘Stride’,2);

The height and width of the rectangular region (pool size) are both 2. This layer creates pooling regions of size [2,2] and takes the average of the four elements in each region. Because the stride (step size for moving along the images vertically and horizontally) is also [2,2] the pooling regions do not overlap.

Average Pooling Layer with Overlapping Pooling Regions

Create an average pooling layer with overlapping pooling regions. Also add padding for the top and bottom of the input.

avgpoollayer = averagePooling2dLayer([3,2],‘Stride’,2,‘Padding’,[1 0]);

The height and width of the rectangular region (pool size) are 3 and 2. This layer creates pooling regions of size [3,2] and takes the average of the six elements in each region. Because the stride is [2,2], the pooling regions overlap.

A value of 1 for the Padding name-value pair indicates that software also adds a row of zeros to the top and bottom of the input data. 0 indicates that no padding is added to the right and left of the input data.

Syntax

maxpoollayer = maxPooling2dLayer(poolSize)

maxpoollayer = maxPooling2dLayer(poolSize,Name,Value)

Description

maxpoollayer = maxPooling2dLayer( poolSize ) returns a layer that performs max pooling , dividing the input into rectangular regions and returning the maximum value of each region. poolSize specifies the dimensions of a pooling region.

maxpoollayer = maxPooling2dLayer( poolSize , Name,Value ) returns the max pooling layer, with additional options specified by one or more Name,Value pair arguments.

Examples:

Max Pooling Layer with Non-Overlapping Pooling Regions

Create a max pooling layer with non-overlapping pooling regions, which down-samples by a factor of 2.

maxpoollayer = maxPooling2dLayer(2,‘Stride’,2);

The height and width of the rectangular region (pool size) are both 2. This layer creates pooling regions of size [2,2] and returns the maximum of the four elements in each region. Because the stride (step size for moving along the images vertically and horizontally) is also [2,2], the pooling regions do not overlap.

Max Pooling Layer with Overlapping Pooling Regions

Create a max pooling layer with overlapping pooling regions. Also add padding for the top and bottom of the input.

maxpoollayer = maxPooling2dLayer([3,2],‘Stride’,2,‘Padding’,[1 0]);

The height and width of the rectangular region (pool size) are 3 and 2. This layer creates pooling regions of size [3,2] and returns the maximum of the six elements in each region. Because the stride (step size for moving along the images vertically and horizontally) is [2,2], the pooling regions overlap.

The value 1 for the Padding name-value pair indicates that the software adds a row of zeros to the top and bottom of the input data. 0 indicates that no padding is added to the right and left of the input data.

Syntax

fullconnectlayer = fullyConnectedLayer(outputSize)

fullconnectlayer = fullyConnectedLayer(outputSize,Name,Value)

Description

fullconnectlayer = fullyConnectedLayer( outputSize ) returns a fully connected layer, in which the software multiplies the input by a weight matrix and then adds a bias vector.

fullconnectlayer = fullyConnectedLayer( outputSize , Name,Value ) returns a fully connected layer with additional options specified by one or more Name,Value pair arguments.

Examples:

Create Fully Connected Layer

Create a fully connected layer with an output size of 10.

fullconnectlayer = fullyConnectedLayer(10);

The software determines the input size at training time.

Specify Initial Weight and Biases in Fully Connected Layer

Create a fully connected layer with an output size of 10. Set the learning rate factor for the bias to 2. Manually initialize the weights from a Gaussian distribution with a standard deviation of 0.0001.

layers = [imageInputLayer([28 28 1],‘Normalization’,‘none’);

convolution2dLayer(5,20,‘NumChannels’,1);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(10);

softmaxLayer();

classificationLayer()];

To initialize the weights of the fully connected layer, you must know the layer’s input size. The input size is equal to the output size of the preceding max pooling layer, which, in turn, depends on the output size of the convolutional layer.

For one direction in a channel (feature map) of the convolutional layer, the output is ((28 – 5 + 20)/1) +1 = 24. The max pooling layer has nonoverlapping regions, so it down-samples by 2 in each direction, i.e., 24/2 = 12. For one channel of the convolutional layer, the output of the max pooling layer is 12 12 = 144. There are 20 channels in the convolutional layer, so the output of the max pooling layer is 144 * 20 = 2880. This is the size of the input to the fully connected layer.

The formula for overlapping regions gives the same result: For one direction of a channel, the output is (((24 – 2 +0)/2) + 1 = 12. For one channel, the output is 144, and for all 20 channels in the convolutional layer, the output of the max pooling layer is 2880.

Initialize the weights of the fully connected layer from a Gaussian distribution with a mean of 0 and a standard deviation of 0.0001.

layers(5).Weights = randn([10 2880])*0.0001;

randn([10 2880]) returns a 10-by-2880 matrix of values from a Gaussian distribution with mean 0 and standard deviation 1. Multiplying the values by 0.0001 sets the standard deviation of the Gaussian distribution equal to 0.0001.

Similarly, initialize the biases from a Gaussian distribution with a mean of 1 and a standard deviation of 0.0001.

layers(5).Bias = randn([10 1])*0.0001+1;

The size of the bias vector is equal to the output size of the fully connected layer, which is 10. randn([10 1]) returns a 10-by-1 vector of values from a Gaussian distribution with a mean of 0 and a standard deviation of 1. Multiplying the values by 0.00001 sets the standard deviation of values equal to 0.00001, and adding 1 sets the mean of the Gaussian distribution equal to 1.

Syntax

droplayer = dropoutLayer()

droplayer = dropoutLayer(probability)

droplayer = dropoutLayer(___,Name,Value)

Description

droplayer = dropoutLayer() returns a dropout layer , which randomly sets input elements to zero with a probability of 0.5. Dropout layer only works at training time.

droplayer = dropoutLayer( probability ) returns a dropout layer, which randomly sets input elements to zero with a probability specified by probability .

droplayer = dropoutLayer( ___ , Name,Value ) returns the dropout layer, with the additional option specified by the Name,Value pair argument.

Examples

Create a dropout layer, which randomly sets about 40% of the input to zero. Assign the name of the layer as dropout1 .

droplayer = dropoutLayer(0.4,‘Name’,‘dropout1’);

Syntax

smlayer = softmaxLayer()

smlayer = softmaxLayer(Name,Value)

Description

smlayer = softmaxLayer() returns a softmax layer for classification problems. The softmax layer uses the softmax activation function.

smlayer = softmaxLayer( Name,Value ) returns a softmax layer, with the additional option specified by the Name,Value pair argument.

Examples

Create Softmax Layer with Specified Name

Create a softmax layer with the name sml1 .

smlayer = softmaxLayer(‘Name’,‘sml1’);

Syntax

coutputlayer = classificationLayer()

coutputlayer = classificationLayer(Name,Value)

Description

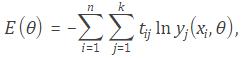

coutputlayer = classificationLayer() returns a classification output layer for a neural network. The classification output layer holds the name of the loss function that the software uses for training the network for multi-class classification, the size of the output, and the class labels.

coutputlayer = classificationLayer( Name,Value ) returns the classification output layer, with the additional option specified by the Name,Value pair argument.

Examples

Create a classification output layer with the name ‘coutput’ .

coutputlayer = classificationLayer(‘Name’,‘coutput’)

coutputlayer =

ClassificationOutputLayer with properties:

OutputSize: ‘auto’

LossFunction: ‘crossentropyex’

ClassNames: {}

Name: ‘coutput’

The software determines the output layer automatically during training. The default loss function for classification is cross entropy for k mutually exclusive classes.

MATLAB has the following functions:

Syntax

trainedNet = trainNetwork(imds,layers,options)

trainedNet = trainNetwork(X,Y,layers,options)

[trainedNet,traininfo] = trainNetwork( ___ )

Description

NOTE: trainNetwork requires the Parallel Computing Toolbox and a CUDA ® -enabled NVIDIA ® GPU with compute capability 3.0 or higher.

trainedNet = trainNetwork( imds , layers , options ) returns a trained network defined by the convolutional neural network (ConvNet) architecture, layers , for the input image data, imds , using the training options, options .

trainedNet = trainNetwork( X , Y , layers , options ) returns a trained network for the predictors in X and responses in Y .

[ trainedNet , traininfo ] = trainNetwork( ___ ) also returns information on the training for any of the above input arguments.

Examples:

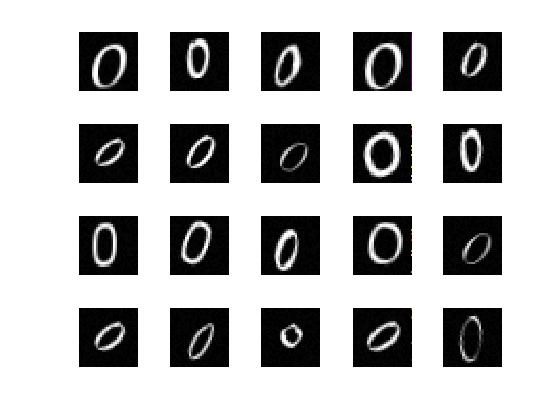

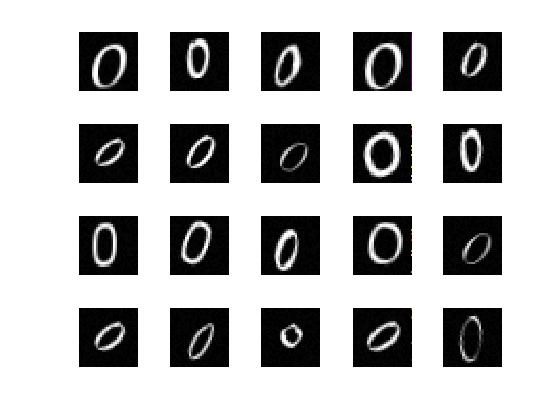

Train a Convolutional Neural Network Using Data in ImageDatastore

Load the sample data as an ImageDatastore object.

digitDatasetPath = fullfile(matlabroot,‘toolbox’,‘nnet’,‘nndemos’,…

‘nndatasets’,‘DigitDataset’);

digitData = imageDatastore(digitDatasetPath,…

‘IncludeSubfolders’,true,‘LabelSource’,‘foldernames’);

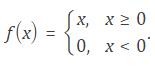

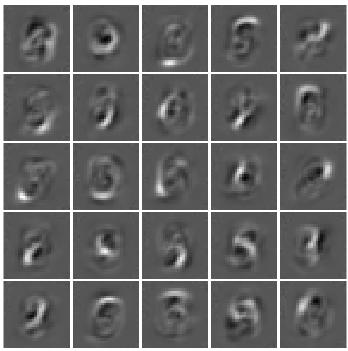

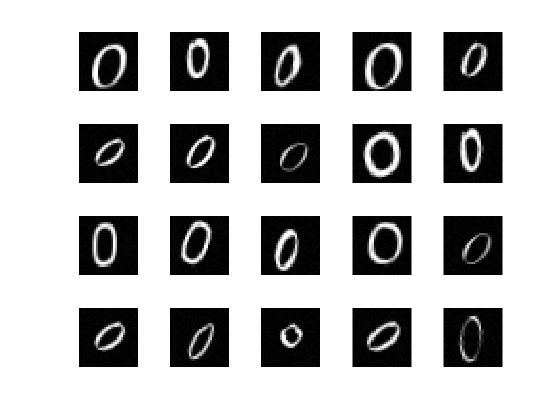

The data store contains 10000 synthetic images of digits 0-9. The images are generated by applying random transformations to digit images created using different fonts. Each digit image is 28-by-28 pixels.

Display some of the images in the datastore.

for i = 1:20

subplot(4,5,i);

imshow(digitData.Files{i});

end

Check the number of images in each digit category.

digitData.countEachLabel

ans =

Label Count

_____ _____

0 988

1 1026

2 1003

3 993

4 991

5 1017

6 992

7 999

8 1003

9 988

The data contains an unequal number of images per category.

To balance the number of images for each digit in the training set, first find the minimum number of images in a category.

minSetCount = min(digitData.countEachLabel{:,2})

minSetCount =

988

Divide the dataset so that each category in the training set has 494 images and the testing set has the remaining images from each label.

trainingNumFiles = round(minSetCount/2);

rng(1) % For reproducibility

[trainDigitData,testDigitData] = splitEachLabel(digitData,…

trainingNumFiles,‘randomize’);

splitEachLabel splits the image files in digitData into two new datastores, trainDigitData and testDigitData .

Define the convolutional neural network architecture.

layers = [imageInputLayer([28 28 1]);

convolution2dLayer(5,20);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(10);

softmaxLayer();

classificationLayer()];

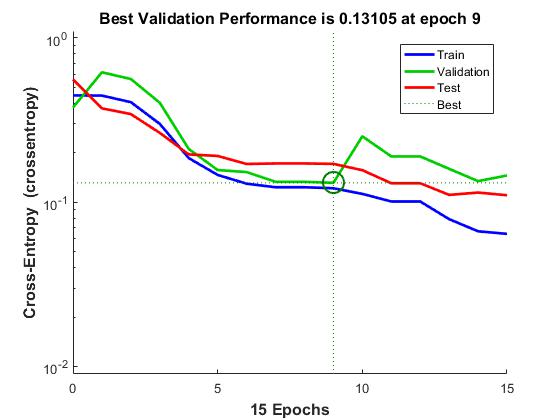

Set the options to default settings for the stochastic gradient descent with momentum. Set the maximum number of epochs at 20, and start the training with an initial learning rate of 0.001.

options = trainingOptions(‘sgdm’,‘MaxEpochs’,20,…

‘InitialLearnRate’,0.001);

Train the network.

convnet = trainNetwork(trainDigitData,layers,options);

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 2 | 50 | 0.72 | 0.2232 | 92.97% | 0.001000 |

| 3 | 100 | 1.37 | 0.0182 | 99.22% | 0.001000 |

| 4 | 150 | 1.99 | 0.0141 | 100.00% | 0.001000 |

| 6 | 200 | 2.64 | 0.0023 | 100.00% | 0.001000 |

| 7 | 250 | 3.27 | 0.0004 | 100.00% | 0.001000 |

| 8 | 300 | 3.91 | 0.0001 | 100.00% | 0.001000 |

| 10 | 350 | 4.56 | 0.0002 | 100.00% | 0.001000 |

| 11 | 400 | 5.19 | 0.0003 | 100.00% | 0.001000 |

| 12 | 450 | 5.82 | 0.0001 | 100.00% | 0.001000 |

| 14 | 500 | 6.46 | 0.0001 | 100.00% | 0.001000 |

| 15 | 550 | 7.09 | 0.0001 | 100.00% | 0.001000 |

| 16 | 600 | 7.72 | 0.0001 | 100.00% | 0.001000 |

| 18 | 650 | 8.37 | 0.0001 | 100.00% | 0.001000 |

| 19 | 700 | 9.00 | 0.0001 | 100.00% | 0.001000 |

| 20 | 750 | 9.62 | 0.0001 | 100.00% | 0.001000 |

|=========================================================================================|

Run the trained network on the test set that was not used to train the network and predict the image labels (digits).

YTest = classify(convnet,testDigitData);

TTest = testDigitData.Labels;

Calculate the accuracy.

accuracy = sum(YTest == TTest)/numel(TTest)

accuracy =

0.9984

Accuracy is the ratio of the number of true labels in the test data matching the classifications from classify, to the number of images in the test data. In this case about 99.8% of the digit estimations match the true digit values in the test set.

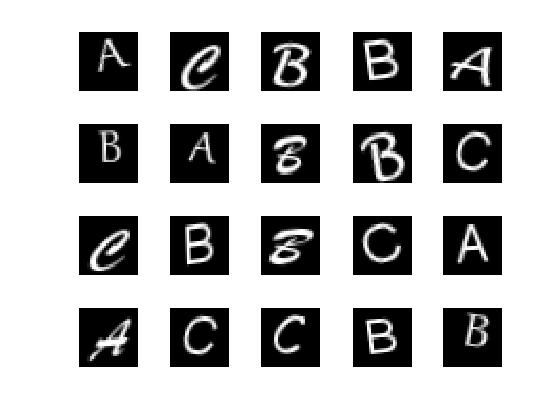

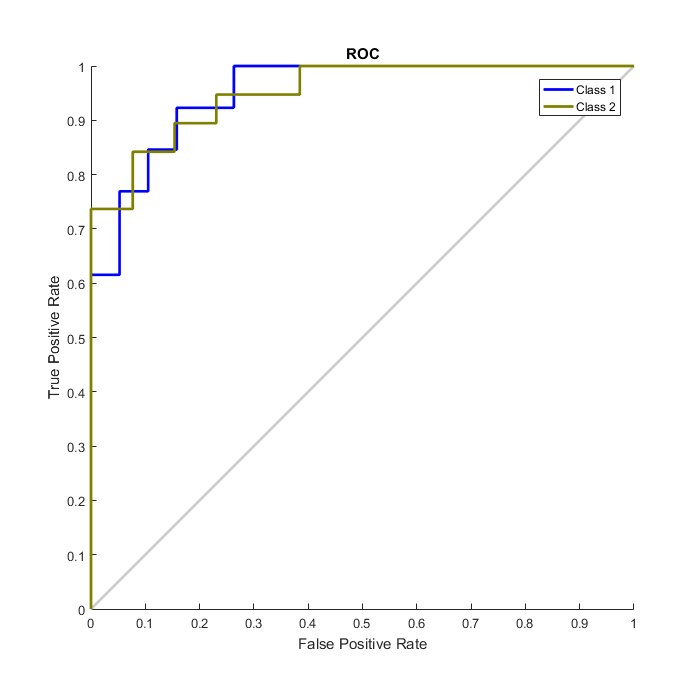

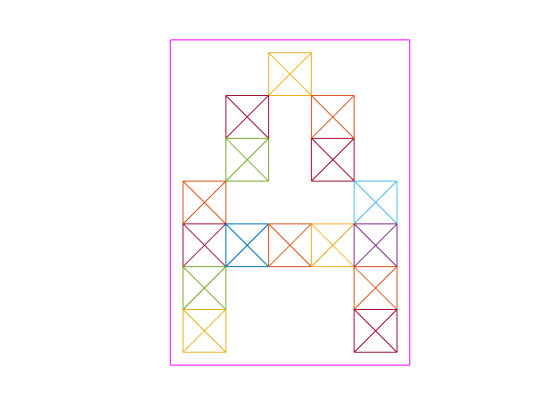

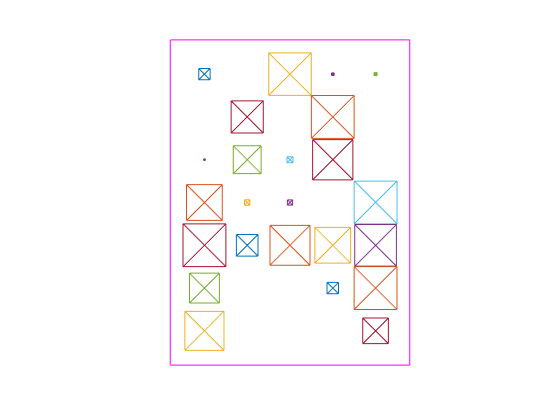

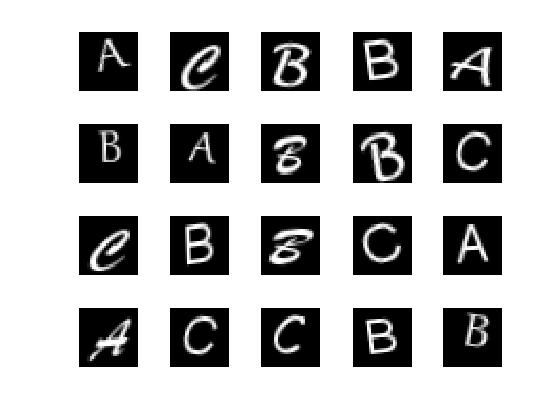

Construct and Train a Convolutional Neural Network

Load the sample data.

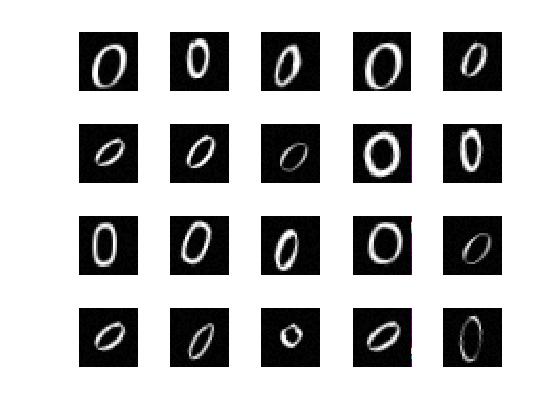

load lettersTrainSet

XTrain contains 1500 28-by-28 grayscale images of the letters A, B, and C in a 4-D array. There is equal numbers of each letter in the data set. TTrain contains the categorical array of the letter labels.

Display some of the letter images.

figure;

for j = 1:20

subplot(4,5,j);

selectImage = datasample(XTrain,1,4);

imshow(selectImage,[]);

end

Define the convolutional neural network architecture.

layers = [imageInputLayer([28 28 1]);

convolution2dLayer(5,16);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(3);

softmaxLayer();

classificationLayer()];

Set the options to default settings for the stochastic gradient descent with momentum.

options = trainingOptions(‘sgdm’);

Train the network.

rng(‘default’) % For reproducibility

net = trainNetwork(XTrain,TTrain,layers,options);

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 5 | 50 | 0.50 | 0.2175 | 98.44% | 0.010000 |

| 10 | 100 | 1.01 | 0.0238 | 100.00% | 0.010000 |

| 14 | 150 | 1.52 | 0.0108 | 100.00% | 0.010000 |

| 19 | 200 | 2.03 | 0.0088 | 100.00% | 0.010000 |

| 23 | 250 | 2.53 | 0.0048 | 100.00% | 0.010000 |

| 28 | 300 | 3.04 | 0.0035 | 100.00% | 0.010000 |

|=========================================================================================|

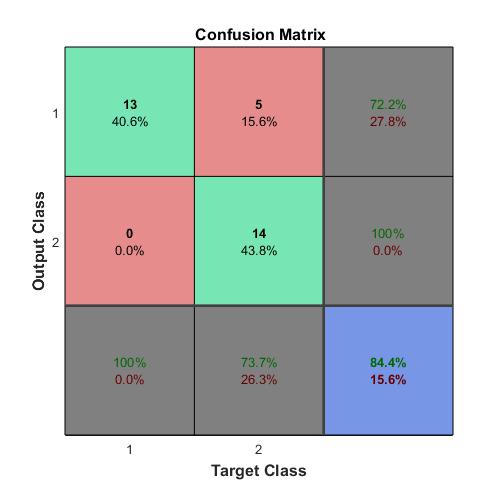

Run the trained network on a test set that was not used to train the network and predict the image labels (letters).

load lettersTestSet;

XTest contains 1500 28-by-28 grayscale images of the letters A, B, and C in a 4-D array. There is again equal numbers of each letter in the data set. TTest contains the categorical array of the letter labels.

YTest = classify(net,XTest);

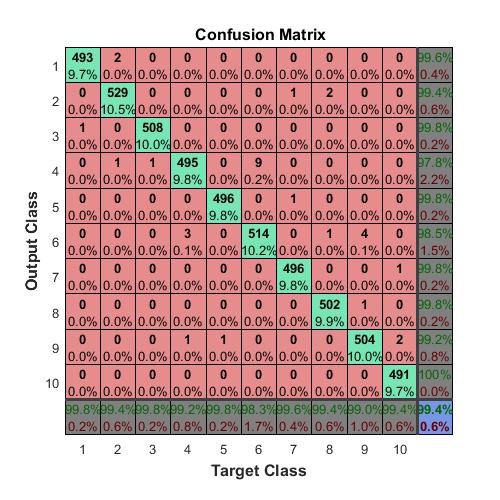

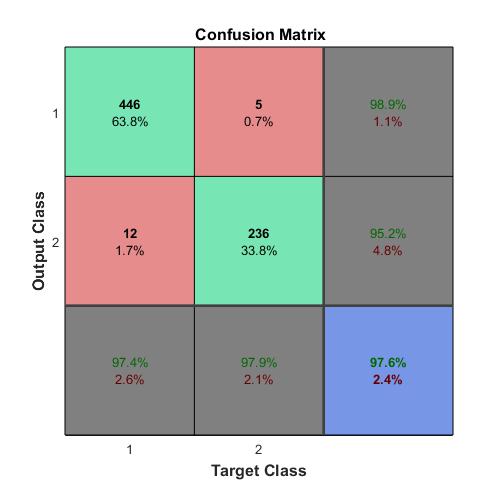

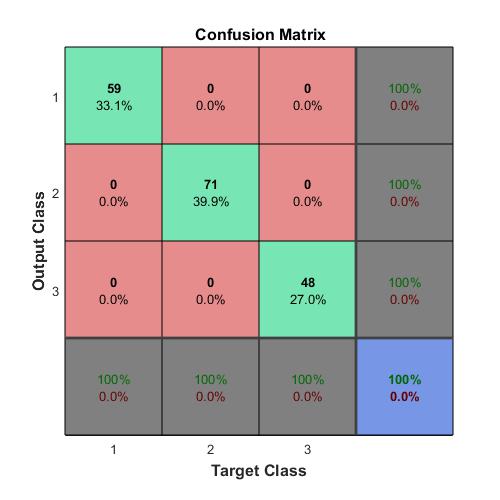

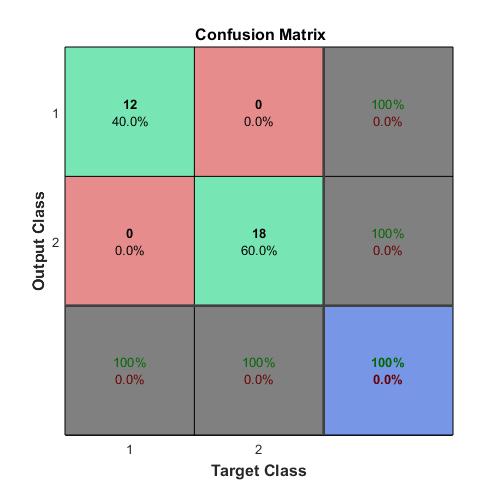

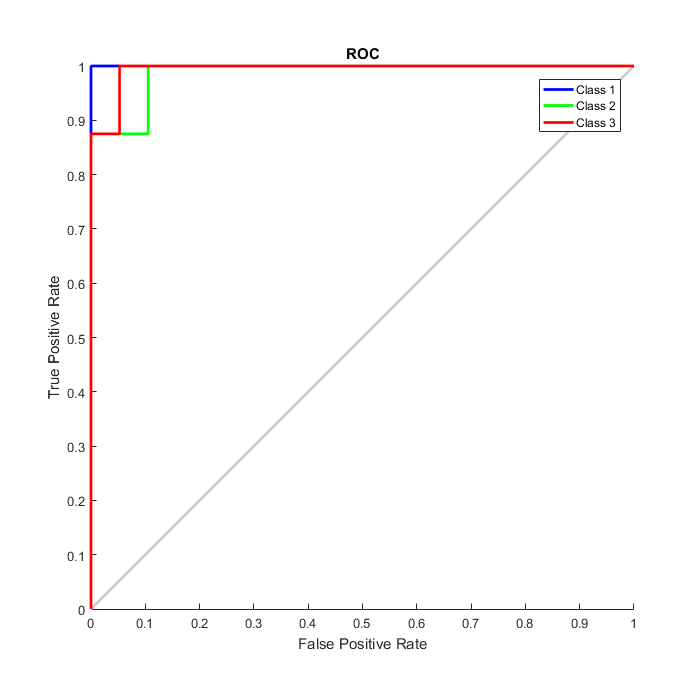

Compute the confusion matrix.

targets(:,1)=(TTest==‘A’);

targets(:,2)=(TTest==‘B’);

targets(:,3)=(TTest==‘C’);

outputs(:,1)=(YTest==‘A’);

outputs(:,2)=(YTest==‘B’);

outputs(:,3)=(YTest==‘C’);

plotconfusion(double(targets’),double(outputs’))

Syntax

options = trainingOptions(solverName)

options = trainingOptions(solverName,Name,Value)

Description

options = trainingOptions( solverName ) returns a set of training options for the solver specified by solverName .

options = trainingOptions( solverName , Name,Value ) returns a set of training options, with additional options specified by one or more Name,Value pair arguments.

Examples

Create a set of options for training a network using stochastic gradient descent with momentum. Reduce the learning rate by a factor of 0.2 every 5 epochs. Set the maximum number of epochs for training at 20, and use a mini-batch with 300 observations at each iteration. Specify a path for saving checkpoint networks after every epoch.

options = trainingOptions(‘sgdm’,…

‘LearnRateSchedule’,‘piecewise’,…

‘LearnRateDropFactor’,0.2,…

‘LearnRateDropPeriod’,5,…

‘MaxEpochs’,20,…

‘MiniBatchSize’,300,…

‘CheckpointPath’,‘C:’);

MATLAB has the following functions:

Syntax

features = activations(net,X,layer)

features = activations(net,X,layer,Name,Value)

Description

features = activations( net , X , layer ) returns network activations for a specific layer using the trained network net and the data in X .

features = activations( net , X , layer , Name,Value ) returns network activations for a specific layer with additional options specified by one or more Name,Value pair arguments.

For example, you can specify the format of the output trainedFeatures .

Example: Extract Features from Trained Convolutional Neural Network

NOTE: Training a convolutional neural network requires Parallel Computing Toolbox™ and a CUDA®-enabled NVIDIA® GPU with compute capability 3.0 or higher.

Load the sample data.

[XTrain,TTrain] = digitTrain4DArrayData;

digitTrain4DArrayData loads the digit training set as 4-D array data. XTrain is a 28-by-28-by-1-by-4940 array, where 28 is the height and 28 is the width of the images. 1 is the number of channels and 4940 is the number of synthetic images of handwritten digits. TTrain is a categorical vector containing the labels for each observation.

Construct the convolutional neural network architecture.

layers = [imageInputLayer([28 28 1]);

convolution2dLayer(5,20);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(10);

softmaxLayer();

classificationLayer()];

Set the options to default settings for the stochastic gradient descent with momentum.

options = trainingOptions(‘sgdm’);

Train the network.

rng(‘default’)

net = trainNetwork(XTrain,TTrain,layers,options);

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 2 | 50 | 0.45 | 2.2301 | 47.66% | 0.010000 |

| 3 | 100 | 0.88 | 0.9880 | 75.00% | 0.010000 |

| 4 | 150 | 1.31 | 0.5558 | 82.03% | 0.010000 |

| 6 | 200 | 1.75 | 0.4022 | 89.06% | 0.010000 |

| 7 | 250 | 2.17 | 0.3750 | 88.28% | 0.010000 |

| 8 | 300 | 2.61 | 0.3368 | 91.41% | 0.010000 |

| 10 | 350 | 3.04 | 0.2589 | 96.09% | 0.010000 |

| 11 | 400 | 3.47 | 0.1396 | 98.44% | 0.010000 |

| 12 | 450 | 3.90 | 0.1802 | 96.09% | 0.010000 |

| 14 | 500 | 4.33 | 0.0892 | 99.22% | 0.010000 |

| 15 | 550 | 4.76 | 0.1221 | 96.88% | 0.010000 |

| 16 | 600 | 5.19 | 0.0961 | 98.44% | 0.010000 |

| 18 | 650 | 5.62 | 0.0856 | 99.22% | 0.010000 |

| 19 | 700 | 6.05 | 0.0651 | 100.00% | 0.010000 |

| 20 | 750 | 6.49 | 0.0582 | 98.44% | 0.010000 |

| 22 | 800 | 6.92 | 0.0808 | 98.44% | 0.010000 |

| 23 | 850 | 7.35 | 0.0521 | 99.22% | 0.010000 |

| 24 | 900 | 7.77 | 0.0248 | 100.00% | 0.010000 |

| 25 | 950 | 8.20 | 0.0241 | 100.00% | 0.010000 |

| 27 | 1000 | 8.63 | 0.0253 | 100.00% | 0.010000 |

| 28 | 1050 | 9.07 | 0.0260 | 100.00% | 0.010000 |

| 29 | 1100 | 9.49 | 0.0246 | 100.00% | 0.010000 |

|=========================================================================================|

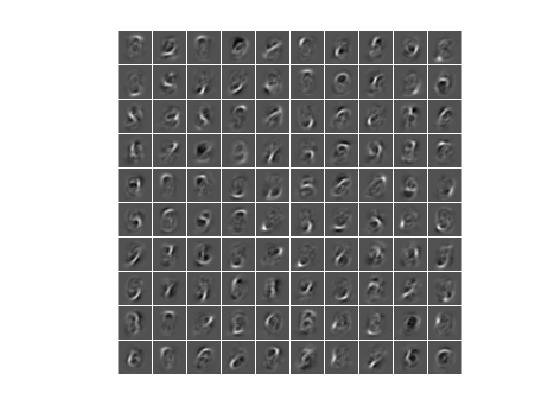

Make predictions, but rather than taking the output from the last layer, specify the second ReLU layer (the sixth layer) as the output layer.

trainFeatures = activations(net,XTrain,6);

These predictions from an inner layer are known as activations or features . activations method, by default, uses a CUDA-enabled GPU with compute ccapability 3.0, when available. You can also choose to run activations on a CPU using the ‘ExecutionEnvironment’,‘cpu’ name-value pair argument.

You can use the returned features to train a support vector machine using the Statistics and Machine Learning Toolbox™ function fitcecoc .

svm = fitcecoc(trainFeatures,TTrain);

Load the test data.

[XTest,TTest]= digitTest4DArrayData;

Extract the features from the same ReLU layer (the sixth layer) for test data and use the returned features to train a support vector machine.

testFeatures = activations(net,XTest,6);

testPredictions = predict(svm,testFeatures);

Plot the confusion matrix. Convert the data into the format plotconfusion accepts

ttest = dummyvar(double(TTest))’; % dummyvar requires Statistics and Machine Learning Toolbox

tpredictions = dummyvar(double(testPredictions))’;

plotconfusion(ttest,tpredictions);

The overall accuracy for the test data using the trained network net is 99.4%.

Manually compute the overall accuracy.

accuracy = sum(TTest == testPredictions)/numel(TTest)

accuracy =

0.9937

Syntax

YPred = predict(net,X)

YPred = predict(net,X,Name,Value)

Description

YPred = predict( net , X ) predicts responses for data in X using the trained network net .

YPred = predict( net , X , Name,Value ) predicts responses with the additional option specified by the Name,Value pair argument.

Examples: Predict Output Scores Using a Trained ConvNet

NOTE: Training a convolutional neural network requires Parallel Computing Toolbox and a CUDA®-enabled NVIDIA® GPU with compute capability 3.0 or higher.

Load the sample data.

[XTrain,TTrain] = digitTrain4DArrayData;

digitTrain4DArrayData loads the digit training set as 4-D array data. XTrain is a 28-by-28-by-1-by-4940 array, where 28 is the height and 28 is the width of the images. 1 is the number of channels and 4940 is the number of synthetic images of handwritten digits. TTrain is a categorical vector containing the labels for each observation.

Construct the convolutional neural network architecture.

layers = [imageInputLayer([28 28 1]);

convolution2dLayer(5,20);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(10);

softmaxLayer();

classificationLayer()];

Set the options to default settings for the stochastic gradient descent with momentum.

options = trainingOptions(‘sgdm’);

Train the network.

rng(1)

net = trainNetwork(XTrain,TTrain,layers,options);

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 2 | 50 | 0.42 | 2.2315 | 51.56% | 0.010000 |

| 3 | 100 | 0.83 | 1.0606 | 68.75% | 0.010000 |

| 4 | 150 | 1.25 | 0.6321 | 82.03% | 0.010000 |

| 6 | 200 | 1.67 | 0.3873 | 85.16% | 0.010000 |

| 7 | 250 | 2.09 | 0.4310 | 89.84% | 0.010000 |

| 8 | 300 | 2.52 | 0.3524 | 90.63% | 0.010000 |

| 10 | 350 | 2.94 | 0.2313 | 96.88% | 0.010000 |

| 11 | 400 | 3.36 | 0.2115 | 94.53% | 0.010000 |

| 12 | 450 | 3.78 | 0.1681 | 96.88% | 0.010000 |

| 14 | 500 | 4.21 | 0.1171 | 100.00% | 0.010000 |

| 15 | 550 | 4.64 | 0.0920 | 99.22% | 0.010000 |

| 16 | 600 | 5.06 | 0.1015 | 99.22% | 0.010000 |

| 18 | 650 | 5.49 | 0.0682 | 98.44% | 0.010000 |

| 19 | 700 | 5.92 | 0.0927 | 99.22% | 0.010000 |

| 20 | 750 | 6.35 | 0.0685 | 98.44% | 0.010000 |

| 22 | 800 | 6.77 | 0.0496 | 99.22% | 0.010000 |

| 23 | 850 | 7.20 | 0.0483 | 99.22% | 0.010000 |

| 24 | 900 | 7.64 | 0.0492 | 99.22% | 0.010000 |

| 25 | 950 | 8.06 | 0.0390 | 100.00% | 0.010000 |

| 27 | 1000 | 8.49 | 0.0315 | 100.00% | 0.010000 |

| 28 | 1050 | 8.92 | 0.0187 | 100.00% | 0.010000 |

| 29 | 1100 | 9.35 | 0.0338 | 100.00% | 0.010000 |

|=========================================================================================|

Run the trained network on a test set and predict the scores.

[XTest,TTest]= digitTest4DArrayData;

YTestPred = predict(net,XTest);

predict , by default, uses a CUDA-enabled GPU with compute ccapability 3.0, when available. You can also choose to run predict on a CPU using the ‘ExecutionEnvironment’,‘cpu’ name-value pair argument.

Display the first 10 images in the test data and compare to the predictions from predict .

TTest(1:10,:)

ans =

0

0

0

0

0

0

0

0

0

0

YTestPred(1:10,:)

ans =

10×10 single matrix

Columns 1 through 7

1.0000 0.0000 0.0000 0.0000 0.0000 0.0000 0.0000

1.0000 0.0000 0.0000 0.0000 0.0000 0.0000 0.0000

0.9998 0.0000 0.0000 0.0000 0.0001 0.0000 0.0000

0.9981 0.0000 0.0005 0.0000 0.0000 0.0000 0.0000

0.9898 0.0032 0.0037 0.0000 0.0000 0.0000 0.0002

0.9987 0.0000 0.0013 0.0000 0.0000 0.0000 0.0000

1.0000 0.0000 0.0000 0.0000 0.0000 0.0000 0.0000

0.9922 0.0000 0.0000 0.0000 0.0000 0.0000 0.0000

0.9930 0.0000 0.0000 0.0000 0.0000 0.0000 0.0000

0.9846 0.0000 0.0000 0.0000 0.0000 0.0000 0.0080

Columns 8 through 10

0.0000 0.0000 0.0000

0.0000 0.0000 0.0000

0.0000 0.0000 0.0001

0.0000 0.0011 0.0004

0.0015 0.0014 0.0001

0.0000 0.0000 0.0000

0.0000 0.0000 0.0000

0.0000 0.0000 0.0078

0.0000 0.0000 0.0070

0.0000 0.0000 0.0073

TTest contains the digits corresponding to the images in XTest . The columns of YTestPred contain predict ’s estimation of a probability that an image contains a particular digit. That is, the first column contains the probability estimate that the given image is digit 0, the second column contains the probability estimate that the image is digit 1, the third column contains the probability estimate that the image is digit 2, and so on. You can see that predict ’s estimation of probabilities for the correct digits are almost 1 and the probability for any other digit is almost 0. predict correctly estimates the first 10 observations as digit 0.

Syntax

[Ypred,scores] = classify(net,X)

[Ypred,scores] = classify(net,X,Name,Value)

Description

[ Ypred , scores ] = classify( net , X ) estimates the classes for the data in X using the trained network, net .

[ Ypred , scores ] = classify( net , X , Name,Value ) estimates the classes with the additional option specified by the Name,Value pair argument.

Examples: Classify Images Using Trained ConvNet

NOTE: Training a convolutional neural network requires Parallel Computing Toolbox™ and a CUDA®-enabled NVIDIA® GPU with compute capability 3.0 or higher.

Load the sample data.

[XTrain,TTrain] = digitTrain4DArrayData;

digitTrain4DArrayData loads the digit training set as 4-D array data. XTrain is a 28-by-28-by-1-by-4940 array, where 28 is the height and 28 is the width of the images. 1 is the number of channels and 4940 is the number of synthetic images of handwritten digits. TTrain is a categorical vector containing the labels for each observation.

Construct the convolutional neural network architecture.

layers = [imageInputLayer([28 28 1]);

convolution2dLayer(5,20);

reluLayer();

maxPooling2dLayer(2,‘Stride’,2);

fullyConnectedLayer(10);

softmaxLayer();

classificationLayer()];

Set the options to default settings for the stochastic gradient descent with momentum.

options = trainingOptions(‘sgdm’);

Train the network.

rng(‘default’)

net = trainNetwork(XTrain,TTrain,layers,options);

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 2 | 50 | 0.42 | 2.2301 | 47.66% | 0.010000 |

| 3 | 100 | 0.83 | 0.9880 | 75.00% | 0.010000 |

| 4 | 150 | 1.24 | 0.5558 | 82.03% | 0.010000 |

| 6 | 200 | 1.66 | 0.4023 | 89.06% | 0.010000 |

| 7 | 250 | 2.08 | 0.3750 | 88.28% | 0.010000 |

| 8 | 300 | 2.50 | 0.3368 | 91.41% | 0.010000 |

| 10 | 350 | 2.93 | 0.2589 | 96.09% | 0.010000 |

| 11 | 400 | 3.35 | 0.1396 | 98.44% | 0.010000 |

| 12 | 450 | 3.77 | 0.1802 | 96.09% | 0.010000 |

| 14 | 500 | 4.19 | 0.0892 | 99.22% | 0.010000 |

| 15 | 550 | 4.62 | 0.1221 | 96.88% | 0.010000 |

| 16 | 600 | 5.05 | 0.0961 | 98.44% | 0.010000 |

| 18 | 650 | 5.48 | 0.0857 | 99.22% | 0.010000 |

| 19 | 700 | 5.90 | 0.0651 | 100.00% | 0.010000 |

| 20 | 750 | 6.33 | 0.0582 | 98.44% | 0.010000 |

| 22 | 800 | 6.76 | 0.0808 | 98.44% | 0.010000 |

| 23 | 850 | 7.19 | 0.0521 | 99.22% | 0.010000 |

| 24 | 900 | 7.61 | 0.0248 | 100.00% | 0.010000 |

| 25 | 950 | 8.03 | 0.0241 | 100.00% | 0.010000 |

| 27 | 1000 | 8.46 | 0.0253 | 100.00% | 0.010000 |

| 28 | 1050 | 8.88 | 0.0260 | 100.00% | 0.010000 |

| 29 | 1100 | 9.31 | 0.0246 | 100.00% | 0.010000 |

|=========================================================================================|

Run the trained network on a test set.

[XTest,TTest]= digitTest4DArrayData;

YTestPred = classify(net,XTest);

Display the first 10 images in the test data and compare to the classification from classify .

[TTest(1:10,:) YTestPred(1:10,:)]

ans =

0 0

0 0

0 0

0 0

0 0

0 0

0 0

0 0

0 0

0 0

The results from classify match the true digits for the first ten images.

Calculate the accuracy over all test data.

accuracy = sum(YTestPred == TTest)/numel(TTest)

accuracy =

0.9929

DEEP LEARNING WITH MATLAB: CONVOLUTIONAL Neural NetworkS. CLASSES

Convolution neural networks (CNNs or ConvNets) are essential tools for deep learning, and are especially suited for image recognition. You can construct a CNN architecture, train a network, and use the trained network to predict class labels. You can also extract features from a pre-trained network, and use these features to train a linear classifier. Neural Network Toolbox also enables you to perform transfer learning; that is, retrain the last fully connected layer of an existing CNN on new data.

MATLAB has the following classes:

Description

Network layer class containing the layer information. Each layer in the architecture of a convolutional neural network is of Layer class.

Construction

To define the architecture of a convolutional neural network, create a vector of layers directly.

Copy Semantics

Value. To learn how value classes affect copy operations, see the following paragraphs:

Two Copy Behaviors

There are two fundamental kinds of MATLAB ® objects — handles and values.

Value objects behave like MATLAB fundamental types with respect to copy operations. Copies are independent values. Operations that you perform on one object do not affect copies of that object.

Handle objects are referenced by their handle variable. Copies of the handle variable refer to the same object. Operations that you perform on a handle object are visible from all handle variables that reference that object.

Value Object Copy Behavior

MATLAB numeric variables are value objects. For example, when you copy a to the variable b , both variables are independent of each other. Changing the value of a does not change the value of b :

a = 8;

b = a;

Now reassign a . b is unchanged:

a = 6;

b

b =

8

Clearing a does not affect b :

clear a

b

b =

8

Value Object Properties

The copy behavior of values stored as properties in value objects is the same as numeric variables. For example, suppose vobj1 is a value object with property a :

vobj1.a = 8;